WEB APPS PERFORMANCE

HOW TO OPTIMIZE AND SPEED UP YOUR WEB APPS

When making a high-performance web app, we need to think about a number of things to make sure it loads quickly, runs smoothly, and is easy to use. For example, the performance of a web app is affected a lot by how well the graphics and network are optimized, how long it takes for a page to load, and how long JavaScript runs.

The content of this white paper aims to help everyone boost the performance of their web application based on major frameworks like Angular, React, Vue, and others. Everyone, from beginners to experts, can use the tips shared in this white paper for their existing or future web applications.

Topic Overview

This white paper will cover a wide range of topics but will focus on four main sections. These are, in order:

- Graphics optimization

- Network optimization

- Improving page loading

- Improving JavaScript runtime

Each section also consists of a set of tips that you can easily apply to your projects.

Graphics Optimization

The image was taken as a screenshot from Filestack.

In our digital world, visual content like images is an integral part of a web application. Images are one of the most common types of content because they make the online experience better and get people more interested. Images, however, should be properly optimized and responsive across different devices. Unoptimized images, such as images that are very large in size, can slow down the web application and drive customers away. For this reason, it’s crucial to focus on graphics optimization right from the beginning of your project.

When it comes to images for the web, the most common image file formats are JPEG, PNG, and GIF. These are all raster images that use a series of pixels or individual blocks with color and tonal information to form the whole image.

Here’s how you can optimize images for your web application to boost performance:

Optimize Image Pixel Resolution

The quality of raster images depends on the resolution, which is essentially the number of pixels an image is made up of or the number of pixels displayed per inch. The higher the number of pixels in an image, the better the image resolution and quality. Conversely, when an image with too few pixels is stretched to fill a larger area, each pixel is enlarged, which makes the image unclear and blurry.

Using the right number of pixels for an image may be the easiest way, especially for experienced developers, to optimize a web image. On the other hand, web developers who are just starting out often make the mistake of using very large images in order to maintain the quality of the images.

For example, if the template provided by the designer shows the image needs to be 500 pixels in width and 300 pixels in height, using an image that is 5000 pixels in width and 3000 pixels in height could cause huge performance issues. The image is ten times larger in each dimension, so it contains 100 times as many pixels and takes much longer to load. Additionally, there won’t be any significant difference in image quality. That said, there are some rare cases when we need to use images bigger than their displayed size.
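To put a number on the example above, the following sketch compares the pixel count of the oversized image with the pixel count the layout actually needs. The function name pixelOverhead is only illustrative.

```javascript
// Compare how many pixels a source image carries versus the slot it is displayed in.
function pixelOverhead(source, display) {
  const sourcePixels = source.width * source.height;
  const displayPixels = display.width * display.height;
  return sourcePixels / displayPixels;
}

const overhead = pixelOverhead(
  { width: 5000, height: 3000 }, // oversized source image
  { width: 500, height: 300 }    // size required by the design
);

console.log(overhead); // 100
```

A factor of ten in each dimension becomes a factor of one hundred in pixels, which is why oversized images are so costly.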

Resolution significantly affects image quality. However, using images larger than required can cause performance issues.

<!-- Instead of using a larger image like this: -->
<img src="large-image.jpg" width="500" height="300">

<!-- Use an image of the appropriate size: -->
<img src="optimal-image.jpg" width="500" height="300">

The bottom line: don’t use images larger than the required size. In other words, always use images with the correct resolution.

With a single command, developers can easily reduce the number of pixels in an image to the right size. For example, with Filestack, you just need to call the resize task:

https://cdn.filestackcontent.com/resize=width:800,height:800,fit:scale/HANDLE

With the Filestack API, developers can manipulate the width and height of an image and change the image’s fit and alignment as well.

Minimize Image Size Using Tools

Beyond pixel resolution, you will also have to focus on reducing the image size in megabytes or kilobytes. The main goal is to reduce the size of the image file while keeping the image quality at a good level. There are a few things we can take out of an image to make it smaller without losing quality. Take, for example, image metadata. Image metadata is the data that tells you about the rights to the image and how it is managed, like who made it, how many pixels it has, and when it was made. Most website users don’t care about metadata, and getting rid of it can help you get the right size image with good quality.

If you’re using a JavaScript bundler like Webpack, you can use plugins such as ImageMinimizerWebpackPlugin. One of the easier ways for developers to reduce image file size with just a single command is to use the Filestack API:

https://cdn.filestackcontent.com/resize=w:1000/compress/6fWKQk3Tq2numL2dACWw

The above command will first resize the image to the given width and then compress it. We could also compress the image without resizing it, but the resulting file size would then be much less predictable, because it would depend on the dimensions of the original image.

Lazy Load Images

Another way to optimize images is to load images lazily. Lazy loading delays the loading of images until the user needs them. In other words, images are not loaded right away; rather, they are loaded later. This helps render the web page quickly as there are fewer bytes to download. All modern browsers support lazy image loading.

Image lazy loading can be achieved by using the loading attribute for the image tag:

<!-- Instead of eagerly loading an image like this: -->

<img src="image.jpg">

<!-- Use the 'loading' attribute to lazily load the image: -->

<img src="image.jpg" loading="lazy">

However, we shouldn’t do this for every image – avoid using lazy loading for images that need to be loaded immediately when the web page loads. If not used properly, it can actually affect the performance of the website negatively.

Key takeaway: Implement lazy loading for images that aren’t needed immediately when the webpage loads.
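As a rough sketch of this rule, the snippet below generates image tags and keeps the first few images eager while marking the rest as lazy. The helper names and the default of two images above the fold are assumptions made for illustration; the right number depends on your layout.

```javascript
// Hypothetical helper: pick the `loading` value for the n-th image on a page,
// given how many images are visible without scrolling.
function loadingAttribute(imageIndex, aboveTheFoldCount) {
  return imageIndex < aboveTheFoldCount ? 'eager' : 'lazy';
}

// Render <img> tags for a list of sources, keeping the first ones eager.
function renderImages(sources, aboveTheFoldCount = 2) {
  return sources
    .map((src, i) => `<img src="${src}" loading="${loadingAttribute(i, aboveTheFoldCount)}">`)
    .join('\n');
}

console.log(renderImages(['hero.jpg', 'logo.png', 'gallery-1.jpg']));
```

Here the hero image and the logo load immediately, while the gallery image waits until the user scrolls near it.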

Using SVG Images (where possible)

Developed by W3C for the web, SVG stands for Scalable Vector Graphics. It uses XML/vector data instead of pixels to create text, shapes, or illustrations. The purpose of the SVG image file format is to render 2D images within web browsers. Unlike raster graphics, SVG images can be scaled as much as needed without losing image quality. Additionally, all major browsers support this format. But SVG isn’t good for pictures with a lot of small details or complicated drawings. Instead, it should be used for simple pictures, logos, icons, etc. SVG files can even be animated.

In terms of optimization, with SVG, if you want the same icon in different colors, you can simply use CSS to change the color and load only one icon. In contrast, if you’re using PNG images, you would have to load two icons. However, sometimes using some other web image format, like PNG, is a better option than SVG. For example, if there is no need to scale the image and the PNG file is smaller in size than SVG, you should use PNG.

SVG images don’t lose quality when scaled, which makes them perfect for logos and simple illustrations.

<!-- Instead of a raster image for a logo: -->

<img src="logo.png">

<!-- Use SVG for sharper results and easy manipulation with CSS: -->

<svg id="logo" xmlns="http://www.w3.org/2000/svg">...</svg>

<!-- In your CSS, you can easily change SVG colors -->

<style>

#logo { fill: #f00; /* red color */ }

</style>

Key takeaway: Use SVG for simple images and illustrations that need to be scaled without losing quality.

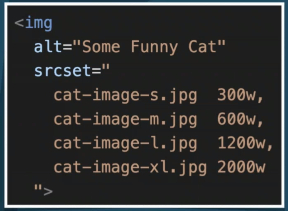

Using srcset Attribute To Load Images

As the trend toward responsive websites has increased drastically, it’s essential to ensure the images on websites or web apps are also responsive. This means images should be displayed according to the size of the user’s device, such as a desktop, mobile device, tablet, or TV. This is where the srcset attribute is helpful. It helps define which image version to load depending on the screen size. For instance, say there is a banner on the main page that is 1600 px in width and 800 px in height; it’s best to load it only if the user accesses the web page from a laptop or desktop, or we could use another variant of the image that is resized for smaller devices. Doing this will also decrease the page loading time, thereby improving performance.

Here is an example of how srcset can be used to define different versions (pixel sizes) of an image:
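A minimal sketch of the idea, written as a small helper that builds the srcset value from a list of widths. The file names (banner-480.jpg and so on), the chosen widths, and the sizes value are assumptions made for illustration; use whatever variants you actually generate.

```javascript
// Build a srcset string from a list of widths.
// `urlForWidth` is a caller-supplied function that returns the URL of the
// image variant resized to the given width.
function buildSrcset(widths, urlForWidth) {
  return widths.map((w) => `${urlForWidth(w)} ${w}w`).join(', ');
}

const srcset = buildSrcset([480, 800, 1600], (w) => `banner-${w}.jpg`);

const imgTag =
  `<img src="banner-800.jpg" srcset="${srcset}" ` +
  `sizes="(max-width: 600px) 100vw, 1600px" alt="Banner">`;

console.log(imgTag);
```

Each entry pairs a URL with its width descriptor (for example, 480w), and the browser picks the smallest variant that satisfies the slot described by sizes.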

Using A Library To Generate Image srcset

Using a library like Filestack adaptive can help you make responsive HTML image elements based on Filestack image conversions. Filestack adaptive makes a srcset with different versions of the image for different devices with just a few lines of code. That’s why many developers use adaptive to save time and effort and focus on other parts of web development.

Here’s how you can use Filestack adaptive:

<script
  src="https://static.filestackapi.com/adaptive/1.4.0/adaptive.min.js"
  crossorigin="anonymous"></script>
<script>
  const options = {
    alt: 'windsurfer',
    sizes: {
      fallback: '60vw',
    }
  };
  const el = fsAdaptive.picture(FILESTACK_HANDLE, options);
  document.body.appendChild(el);
</script>

The above will yield the following results:

<picture>

<img src="https://cdn.filestackcontent.com/5aYkEQJSQCmYShsoCnZN"

srcset="

https://cdn.filestackcontent.com/resize=width:180/5aYkEQJSQCmYShsoCnZN

180w,

https://cdn.filestackcontent.com/resize=width:360/5aYkEQJSQCmYShsoCnZN

360w,

https://cdn.filestackcontent.com/resize=width:540/5aYkEQJSQCmYShsoCnZN

540w,

https://cdn.filestackcontent.com/resize=width:720/5aYkEQJSQCmYShsoCnZN

720w,

https://cdn.filestackcontent.com/resize=width:900/5aYkEQJSQCmYShsoCnZN

900w,

https://cdn.filestackcontent.com/resize=width:1080/5aYkEQJSQCmYShsoCnZN

1080w,

https://cdn.filestackcontent.com/resize=width:1296/5aYkEQJSQCmYShsoCnZN

1296w,

https://cdn.filestackcontent.com/resize=width:1512/5aYkEQJSQCmYShsoCnZN

1512w,

https://cdn.filestackcontent.com/resize=width:1728/5aYkEQJSQCmYShsoCnZN

1728w,

https://cdn.filestackcontent.com/resize=width:1944/5aYkEQJSQCmYShsoCnZN

1944w,

https://cdn.filestackcontent.com/resize=width:2160/5aYkEQJSQCmYShsoCnZN

2160w,

https://cdn.filestackcontent.com/resize=width:2376/5aYkEQJSQCmYShsoCnZN

2376w,

https://cdn.filestackcontent.com/resize=width:2592/5aYkEQJSQCmYShsoCnZN

2592w,

https://cdn.filestackcontent.com/resize=width:2808/5aYkEQJSQCmYShsoCnZN

2808w,

https://cdn.filestackcontent.com/resize=width:3024/5aYkEQJSQCmYShsoCnZN

3024w"

alt="photo_01"

sizes="60vw">

</picture>

Network Optimization

Now that you have considered graphics optimization, let’s look at network optimization, which is another critical aspect of improving web application performance. Network optimization helps deliver images and other web assets quickly on the client side and speeds up page loading time.

Reduce The Number Of HTTP Requests

HTTP stands for Hypertext Transfer Protocol, which is essentially a set of rules that allow for communication between web servers and clients. When a user accesses a web page, the browser needs to make a series of HTTP requests and receive the corresponding HTTP responses before it can display the content. For example, when the client (the browser) asks for an HTML page, the server sends back an HTML file. In the same way, when the browser asks for an image, the server sends back an image file.

In other words, HTTP requests allow files like images, text, video, audio, and other multimedia files to be sent over the web. The number of HTTP requests has a notable impact on web page loading time. Typically, fewer HTTP requests result in a faster loading web page or web application.

Here are some effective ways to minimize the number of HTTP requests:

- Inspect your web page for irrelevant assets and remove them. These can include unnecessary images, such as those used on web pages that don’t exist anymore.

- Combining all your CSS and JavaScript files into one single bundle also proves to be quite helpful in reducing HTTP requests.

- Smartly design your backend API in a way that requires a minimum number of HTTP calls to fetch the data.

- Another useful option to consider is GraphQL, a query language for APIs that empowers clients to request exactly the data they need in one single request. However, using GraphQL requires special attention, especially if you haven’t worked with it before, because it can significantly dent your web app’s performance if not used correctly.

Every HTTP request introduces additional latency in your application. Hence, it is crucial to minimize these requests.
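One way to apply the API design tip above is to let a single endpoint return several records at once. The sketch below assumes a hypothetical /api/users endpoint that accepts a comma-separated ids parameter; your backend would need to support such a parameter for this to work.

```javascript
// Build one URL that asks for many users, instead of one request per user.
// The endpoint and the `ids` parameter are assumptions for illustration.
function buildBatchUrl(basePath, ids) {
  const uniqueIds = [...new Set(ids)]; // avoid asking for the same record twice
  return `${basePath}?ids=${uniqueIds.map(encodeURIComponent).join(',')}`;
}

const url = buildBatchUrl('/api/users', [1, 2, 2, 3]);
console.log(url); // /api/users?ids=1,2,3
```

Three separate requests (plus one duplicate) collapse into a single round trip.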

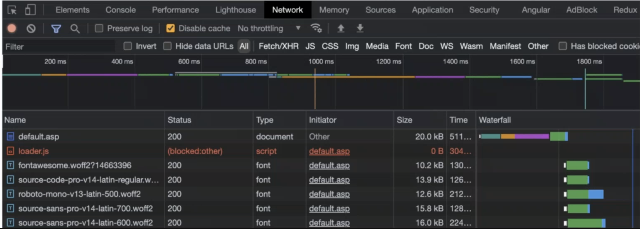

Use Chrome DevTools to analyze the number of HTTP requests:

In the ‘Network’ tab of Chrome DevTools, you can analyze all the HTTP requests your web page is making.

Minimize HTTP requests by bundling your assets:

If you’re using a tool like Webpack, you can combine all your CSS and JS files into a single bundle, reducing the number of requests.

// Webpack config example
const path = require('path');

module.exports = {
  entry: './src/index.js',
  output: {
    filename: 'bundle.js',
    path: path.resolve(__dirname, 'dist'),
  },
};

Smartly design your backend APIs:

Design your backend APIs to return all the required data in a single request. For complex data needs, consider using a tool like GraphQL which allows the client to specify exactly what data they need.

// GraphQL example
const { ApolloServer, gql } = require('apollo-server');

const typeDefs = gql`
  type Query {
    user(id: ID!): User
  }

  type User {
    id: ID!
    name: String
    posts: [Post]
  }

  type Post {
    id: ID!
    title: String
    content: String
  }
`;

// Your resolvers go here. This placeholder returns no data;
// replace it with a lookup against your own data source.
const resolvers = {
  Query: {
    user: (_parent, { id }) => null,
  },
};

const server = new ApolloServer({ typeDefs, resolvers });

server.listen().then(({ url }) => console.log(`Server ready at ${url}`));

Using HTTP 2.0 Protocol

HTTP/2 is a significant upgrade from HTTP 1.1 and introduces several enhancements that can boost your web app’s performance.

HTTP 1.1 has been the standard version of HTTP for more than 15 years, and it has connections that stay open to improve performance. This very version also formed the basis for standard HTTP requests: GET, PUT, HEAD, and POST. If HTTP 1.1 seemed sufficient before, then why did we need the HTTP 2.0 protocol?

The limitations of HTTP 1.1 were pretty evident on resource-intensive websites. It only used one outstanding request per TCP connection, which added a lot of extra work and made pages take longer to load. Google released the SPDY protocol in 2010 to solve this problem. Its main goal was to reduce latency by customizing the TCP pipeline and adding the necessary compression. Even though HTTP 2 was initially based on SPDY, it was later changed to include other unique features, such as a fixed header compression algorithm. HTTP 2 allows multiple requests and file transfers simultaneously. This means that when using HTTP 2, there is no need to combine CSS and JS files into a single file. All in all, HTTP 2 makes data transferring and data parsing more efficient.

In addition, HTTP/2 is easy to configure. Generally, you don’t need to handle the web server configuration yourself, and even when you do, enabling it is usually a small change, such as adding an http2 setting to the server’s listen configuration.

Benefits of HTTP/2 include:

- Multiplexing: HTTP/2 allows multiple requests and responses to be transferred simultaneously over a single connection. This makes it unnecessary to bundle your CSS and JS files.

- Header compression: HTTP/2 uses the HPACK compression format for efficient and secure compression of HTTP headers.

Configuring HTTP/2 with Node.js:

// Setting up an HTTP/2 server in Node.js
const http2 = require('node:http2');
const fs = require('node:fs');

const server = http2.createSecureServer({
  key: fs.readFileSync('localhost-privkey.pem'),
  cert: fs.readFileSync('localhost-cert.pem')
});

server.on('stream', (stream, headers) => {
  stream.respond({
    'content-type': 'text/html',
    ':status': 200
  });
  stream.end('<h1>Hello World</h1>');
});

server.listen(8443);

The server created in the example above will serve a simple “Hello World” webpage over HTTP/2.

Remember, always make sure your optimization strategies align with your specific web app’s needs and use cases. Different apps have different needs, and no single optimization strategy will be ideal for every scenario.

Enabling GZip Compression

Compression, in general, means reducing the file size, and it can be lossy or lossless. In lossy compression, some of the information is lost when the file is compressed, but in lossless compression, all of the information from the original file is kept in the compressed file. GZip compression is yet another way to improve page load time. GZip is both a piece of software and a file format that is used to shrink HTTP content before sending it from the server to the client. It is currently the standard file compression format on the web. Before you send files over the network, they are compressed, and then the browser uncompresses them. This reduces the amount of data that needs to be sent between the server and the client.

Compression is a key strategy for network optimization as it helps to reduce the size of HTTP content transferred between the server and the client. GZip compression, a common choice for this purpose, can reduce file sizes by up to 80%.
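To see the effect on a small scale, the sketch below compresses a block of repetitive markup with Node.js’s built-in zlib module and then restores it. The sample markup is made up for illustration, and the exact savings depend on the content; repetitive text compresses particularly well.

```javascript
const zlib = require('node:zlib');

// Repetitive markup, similar in spirit to typical HTML, CSS, or JS.
const original = Buffer.from('<li class="item">Hello, world</li>\n'.repeat(200));

const compressed = zlib.gzipSync(original);   // what the server would send
const restored = zlib.gunzipSync(compressed); // what the browser reconstructs

console.log(`original: ${original.length} bytes, gzipped: ${compressed.length} bytes`);
console.log('lossless:', restored.equals(original)); // prints "lossless: true"
```

Because GZip is lossless, the restored buffer is byte-for-byte identical to the original.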

Enabling GZip with Express.js:

In a Node.js application using Express, you can use the compression middleware to apply GZip compression to your responses.

const compression = require('compression')
const express = require('express')

const app = express()

app.use(compression())

Optimizing Static File Caching

In the context of web development, caching refers to the temporary storage of copies of files known as “cache.” This temporary storage can be on the user’s browser or an intermediate server. Caching is known to reduce the time required to access a file. Additionally, caching also minimizes the number of requests from the server.

Browsers can cache as much as half of all the downloaded content, making it quicker for users to view the images, JS, and CSS files. This is because when files are cached, users access them from their systems instead of accessing them over the network.

Say, for instance, that you open a website, and the server returns HTML. When this HTML is parsed by the browser, it identifies that a CSS file needs to be loaded and sends the request for this file. The server sends back the file that was requested and tells the browser to store it for 15 days. Now, if you request another web page from the same website after a day, the HTML will again be parsed by the browser, and it’ll again identify the CSS file. This time, however, the browser already has the file available in the cache, so it doesn’t need to request it from the server over the network.

Caching helps minimize server requests by storing copies of files. When a file is cached, subsequent requests for the file can be fulfilled from the cache, reducing network usage.
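The 15-day scenario above can be sketched with two small helpers: one builds the Cache-Control header value the server would send, and the other mimics the browser’s decision about whether a stored copy is still fresh. Both function names are illustrative, and real browsers apply more rules than this simple age check.

```javascript
const SECONDS_PER_DAY = 24 * 60 * 60;

// Header value telling the browser how long it may reuse the file.
function cacheControlForDays(days) {
  return `public, max-age=${days * SECONDS_PER_DAY}`;
}

// Simplified freshness check: is the stored copy younger than max-age?
function isFresh(storedAtMs, maxAgeSeconds, nowMs) {
  return (nowMs - storedAtMs) / 1000 < maxAgeSeconds;
}

const maxAge = 15 * SECONDS_PER_DAY;
const oneDayLater = 1 * SECONDS_PER_DAY * 1000;

console.log(cacheControlForDays(15));        // public, max-age=1296000
console.log(isFresh(0, maxAge, oneDayLater)); // true
```

One day after the first visit the stored CSS file is still fresh, so no network request is needed.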

Implement HTTP caching with Express.js:

Express.js can specify HTTP caching headers on responses to instruct browsers how long to cache the file.

app.use(express.static('public', {
  maxAge: '30d', // Sets "Cache-Control: public, max-age=2592000" (30 days, in seconds)
}));

Leveraging Content Delivery Networks (CDNs)

CDN, which stands for “content delivery network,” is a group of servers that are spread out around the world so that content on the internet can be sent quickly. Most of us often use CDNs. For example, every time we access our social media feeds, read articles on the internet, shop online, or watch a YouTube video, we use a CDN. CDNs reduce latency problems and speed up the time it takes for a page to load by reducing the physical distance between the user and the hosting server of a website.

Basically, a CDN creates cached versions of the content and stores them in several geographical locations, known as points of presence (PoPs). Each PoP consists of multiple caching servers that deliver content to visitors within their proximity. In other words, a CDN stores your content in more than one place at the same time. This makes sure that your content is delivered quickly and smoothly. Say, for example, your website is hosted in the US. When someone from Spain accesses it, the content is delivered from a nearby PoP in Spain rather than from the US server.

Thankfully, third-party providers like Filestack provide built-in CDNs. When you need to skip the cached copy and retrieve a file directly from its storage location, you can use the following URL:

https://cdn.filestackcontent.com/cache=false/HANDLE

CDNs enhance delivery speeds by storing cached versions of your content in numerous geographical locations. This can significantly reduce latency and improve loading times.

Improving Page Loading

One of the most critical elements of website performance is how quickly your web page loads. Several studies have shown that a fast and properly optimized web application does a great deal to improve user engagement, user experience, and conversion rate. Take, for example, this research by Portent, which found that the conversion rate of a site (B2C eCommerce) that loads in 1 second is 2.5 times higher than a site that takes around 5 seconds to load. Additionally, the average conversion rate of web pages that load in 1 second is around 40%. In another study, as many as 90% of consumers admitted they left an online store/website due to slow loading time.

Also, how visible a website or web app is on Google depends in part on how quickly it loads. Loading time is one of the many factors that affect the ranking of a website on Google SERPs (search engine results pages).

Here are some effective ways to improve page loading:

Reducing The Number Of Scripts

Usually, in enterprise-grade web apps or websites, there are several scripts that are loaded every time, but they aren’t actually being used. For example, say a developer added some scripts that aren’t used anymore, but the developer doesn’t work for the company anymore, so no one knows those scripts exist. That’s why it’s recommended to check scripts frequently and delete the ones that aren’t being used anymore. The same goes for third-party plugins and libraries.

Improving Page Load Times

Page loading time is a critical metric that impacts user engagement, user experience, and search engine rankings.

Reducing script load with webpack:

You can inspect what ends up in your bundles using tools like webpack (for example, through its build statistics output) and remove any unused or unnecessary scripts.

Writing Code That Can Be Tree-Shaken

Tree-shaking refers to removing dead code from a bundle. It is usually done through tools like Webpack and Rollup. Basically, once you write code, the tool analyzes it and removes the dead code from it. Say, for instance, that you imported a function but didn’t call it anywhere in the code, so there is no need to include it in the final bundle. When you use the tree-shaking feature, it removes such code from the final bundle, making it lightweight. As a result, the website will load faster.

Below are some points to consider in order to write code that can be tree-shaken:

- Avoid CommonJS module imports using the require() function. This is because when you use the require() function, tools like Webpack cannot efficiently detect which code should be tree-shaken.

- Try to avoid side effects as much as possible because such code is difficult for tools like Webpack to tree-shake. A function is said to have side effects when it alters a global variable or some other variable that is out of the scope of the function.
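The difference between a function with side effects and a pure one can be sketched as follows. The names are illustrative. Bundlers tend to be conservative about removing code that might change outside state, so the pure style is easier for them to tree-shake.

```javascript
let total = 0; // state that lives outside the functions below

// Side effect: changes `total`, a variable outside the function's scope.
function addToTotal(amount) {
  total += amount;
  return total;
}

// Pure: the result depends only on the arguments and nothing else is touched.
function add(a, b) {
  return a + b;
}

console.log(add(2, 3)); // 5
console.log(total);     // 0
```

Calling add leaves total unchanged, whereas every call to addToTotal changes what later calls will return.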

Implementing Tree-Shaking:

Tree-shaking is a process to eliminate dead code from your bundles. You can enable it in your webpack configuration.

// webpack.config.js
const path = require('path');

module.exports = {
  // Production mode turns on the optimizations that drop unused exports
  mode: 'production',
  entry: {
    app: './src/app.js'
  },
  output: {
    path: path.resolve(__dirname, 'dist'),
    filename: '[name].bundle.js'
  },
};

Splitting Your Codebase Into Smaller Chunks And Lazy Load Them

One of the most important things you need to do to make high-performance web apps is to break your code or JS app into chunks that can be loaded as needed. So why do we need it? When you develop an enterprise-grade web app, the total size of the source code is huge. The browser first needs to download the big JS bundle file and then execute the code inside it.

For example, if your JS bundle file is 5 megabytes, the browser needs to download the whole file before it can run the code. This makes the page take a long time to load. This is where code-splitting comes in handy. It lets you divide the source code for your JS app into multiple bundles that the browser can download separately.

Typically, there is one main bundle and multiple supporting bundles called chunk files. These chunk files are loaded only when they are needed. Although the total size of the source code is the same, the smaller size of the main bundle improves the initial page load time, resulting in a better user experience.

The same goes for styles. For example, if you have two theme options (light and dark) and only one theme can be activated at a time, you can load the theme (CSS file) as requested by the user.
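The theme example can be sketched as a small loader that fetches a theme only when it is first requested and reuses the result afterwards. The importTheme function is supplied by the caller and is hypothetical here; in a real app it might wrap a dynamic import or insert a stylesheet link.

```javascript
// `importTheme` starts loading a theme and returns a promise.
function createThemeLoader(importTheme) {
  const cache = new Map(); // theme name -> promise of the loaded theme
  return function loadTheme(name) {
    if (!cache.has(name)) {
      cache.set(name, importTheme(name));
    }
    return cache.get(name);
  };
}

// Usage with a stand-in importer that records what was requested.
const requested = [];
const loadTheme = createThemeLoader((name) => {
  requested.push(name);
  return Promise.resolve({ name });
});

loadTheme('dark');
loadTheme('dark'); // served from the cache, no second load

console.log(requested); // [ 'dark' ]
```

The light theme is never downloaded unless the user actually switches to it.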

Utilizing Code-Splitting:

Large applications can benefit from code-splitting, where your codebase is broken down into smaller chunks that are loaded as needed. Webpack supports this out-of-the-box with dynamic import() syntax.

// This async function will load the module only when called.

async function loadComponent() {

const { default: component } = await import('./component.js');

return component;

}

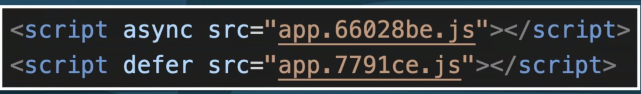

Applying Async, Defer, Preload, and Prefetch Attributes

When the browser loads HTML and encounters a <script> tag, it has to download and execute the script before it can continue reading the remaining HTML to build the DOM. As a result, the page rendering is blocked. One way to solve this problem is to put the script at the bottom of the page. However, this isn’t usually recommended because the browser will only start loading the script when it reaches the bottom of the page. A more efficient way is to use the async and defer attributes.

Both async and defer download JavaScript in the background, so fetching the script does not block parsing. However, there are a few differences between these two attributes. First, an async script runs as soon as it has finished downloading, in no guaranteed order, while deferred scripts run in document order after the HTML has been parsed, right before the DOMContentLoaded event. Also, because an async script can run while the page is still being parsed, it may briefly interrupt rendering, but a deferred script doesn’t do that.

Similarly, you can also improve asset loading using preload and prefetch strategies. For example, you can preload and prefetch some fonts, CSS, etc. Preload tells browsers to load an asset immediately when the page is requested instead of during the parsing. However, the assets are only loaded, not activated. On the other hand, prefetch is mostly used when you need a font or style later, such as for the next page.

You can also enhance your asset loading by utilizing preload and prefetch. preload is for loading crucial resources that are needed during the current navigation, while prefetch is for loading resources that might be needed for future navigations.

<link rel="preload" href="style.css" as="style">

<link rel="prefetch" href="next-page.html">

Optimizing JavaScript Runtime

JavaScript runtime refers to the environment where your code will be executed when you run it. It identifies the global objects that your code can access and also impacts how it runs.

Here is how you can improve the JavaScript runtime:

Using WebWorkers:

Using WebWorkers is a good choice when you need to do heavy computations that can block the main thread. You can use WebWorkers to do the computation. This can improve performance when dealing with heavy computations.
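As a sketch of what the worker side might look like, the file below does a CPU-heavy calculation and sends the result back. The sumOfSquares function is only a stand-in for real work, and the sketch assumes the main thread posts a number (for example, worker.postMessage(1000000)) and listens for the reply with worker.onmessage.

```javascript
// worker.js (sketch)
// CPU-heavy work that would otherwise block the main thread.
function sumOfSquares(n) {
  let sum = 0;
  for (let i = 1; i <= n; i++) {
    sum += i * i;
  }
  return sum;
}

// `importScripts` is only defined inside a worker, so this guard keeps the
// file loadable elsewhere (for example, in unit tests).
if (typeof importScripts === 'function') {
  self.onmessage = (event) => {
    self.postMessage(sumOfSquares(event.data));
  };
}
```

While the worker runs the loop, the main thread stays free to handle input and rendering.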

let worker = new Worker('worker.js');

worker.postMessage('Hello, worker');

Using ServiceWorkers:

You can use ServiceWorkers to cache static files or perform other tasks related to networking. For instance, a service worker can intercept network requests and answer them from its cache, which enables an offline experience and improves performance.

if ('serviceWorker' in navigator) {
  navigator.serviceWorker.register('/sw.js');
}

Using Memoization Techniques:

Memoization means caching or storing the results of expensive function calls. The stored result is returned when the same function is called again with the same arguments. Memoization techniques are known to speed up web applications. You can use libraries like lodash.memoize, fast-memoize.js, etc. to implement memoization techniques.
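A minimal hand-written sketch of the technique, assuming a function of a single argument. It is meant to show the idea rather than replace the libraries mentioned above, which handle more cases.

```javascript
// Wrap a one-argument function so repeated calls reuse the stored result.
function memoize(fn) {
  const cache = new Map(); // argument -> previously computed result
  return function memoized(arg) {
    if (!cache.has(arg)) {
      cache.set(arg, fn(arg));
    }
    return cache.get(arg);
  };
}

let calls = 0;
const slowSquare = (n) => {
  calls += 1; // count how often the real work runs
  return n * n;
};

const fastSquare = memoize(slowSquare);
fastSquare(12); // computed
fastSquare(12); // returned from the cache

console.log(calls); // 1
```

The second call never reaches slowSquare, which is where the time savings come from when the wrapped function is expensive.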

Memory Leaks:

It’s important to avoid memory leaks because they can make the user’s experience less optimal.

Leveraging the Intersection Observer API:

The Intersection Observer API is another useful feature that allows you to track when target elements enter the viewport. It is helpful for the lazy loading of images, deciding when to perform specific tasks or animation processes, etc.

let observer = new IntersectionObserver(entries => {
  entries.forEach(entry => {
    if (entry.isIntersecting) {
      // Do something
    }
  });
});

observer.observe(document.querySelector('.target'));

Tools To Analyze The Performance Of Web Applications

Chrome DevTools

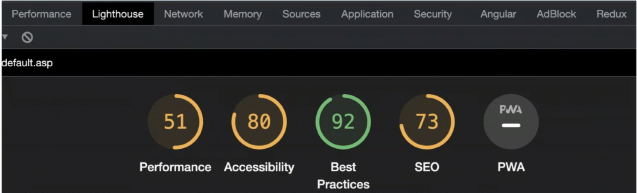

Lighthouse tab

The Lighthouse tab in Chrome DevTools is a useful feature that gives you a list of categories (along with scores) that are crucial for improving page performance and making the page more accessible. The categories include performance, accessibility, SEO, and more.

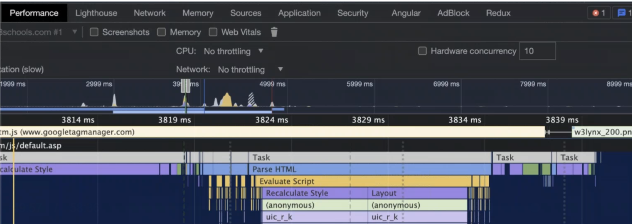

Performance Tab

The performance tab is helpful in analyzing the JavaScript runtime performance.

Network Tab

This tool is helpful in analyzing the HTTP requests in your application. You can use it to see if there are any duplicate requests, how much time a request takes to load, and more.

Conclusion

In this whitepaper, we’ve talked about a number of techniques, tips, and tricks for optimizing graphics, optimizing networks, improving the speed at which pages load, and improving the speed at which JavaScript runs. All in all, these tips can boost the performance of your web application. But building a website is a complicated process with many complicated steps, and using all of these tricks can take a lot of time. However, you don’t always have to reinvent the wheel. Thankfully, there are tools available on the market that can help you perform different tasks related to web development quickly and efficiently.

For instance, web apps, especially social media apps, need to be able to allow users to upload files, such as videos, images, and audio files. Creating a file uploader manually can take a lot of time. On the other hand, you can use an efficient third-party file uploader like Filestack.

Filestack has a full-featured interface for uploading that lets you choose your own upload sources, drag and drop files, and more. We also have features at Filestack that let you change your images, like resizing, cropping, compressing, and more. Furthermore, we offer a fully supported CDN and machine learning tools such as OCR. Filestack also integrates easily with major cloud storage providers like Azure, AWS, and Google Cloud.

You can explore more features at Filestack.com.
